Hi @aaime-geosolutions,

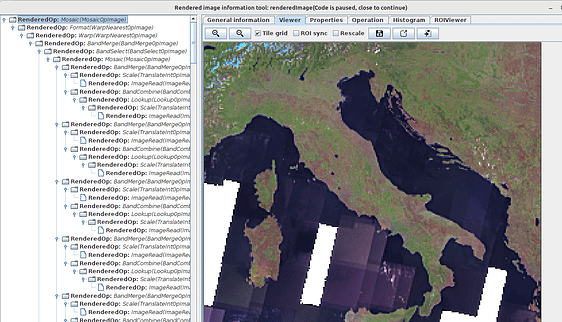

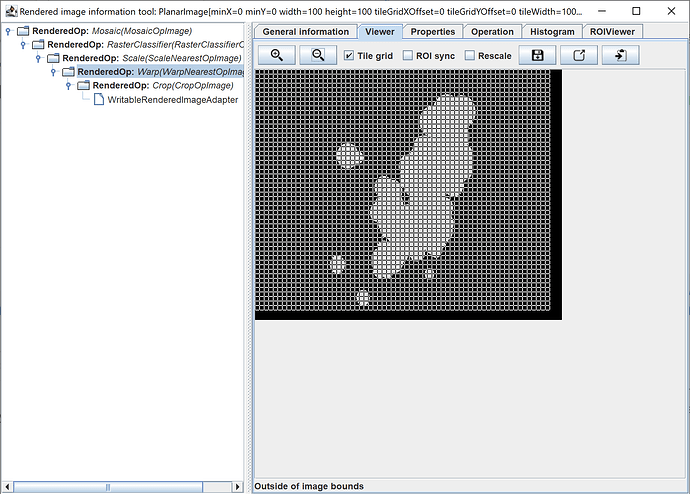

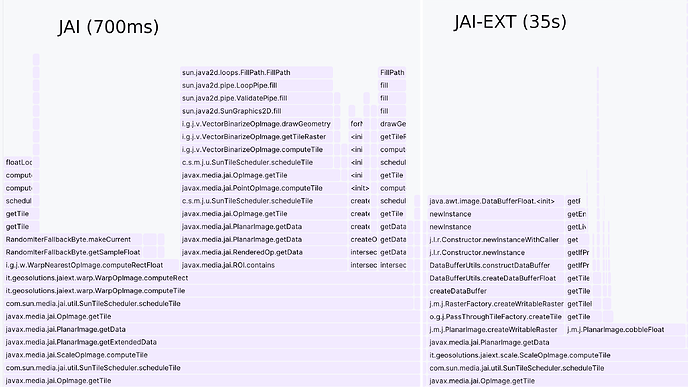

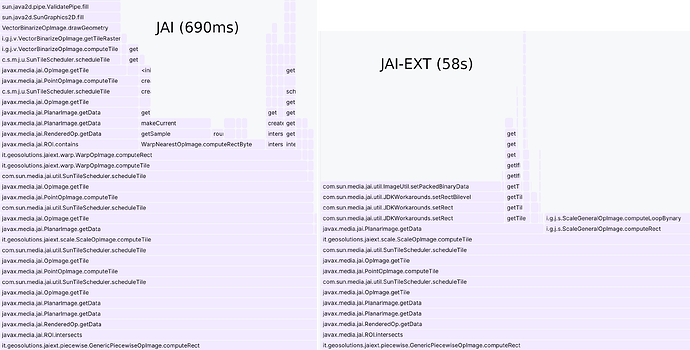

Wow, thanks so much for the idea with the chain analyzer GUI. It worked perfectly out of the box. Here you can see a very typical operation stack for our data:

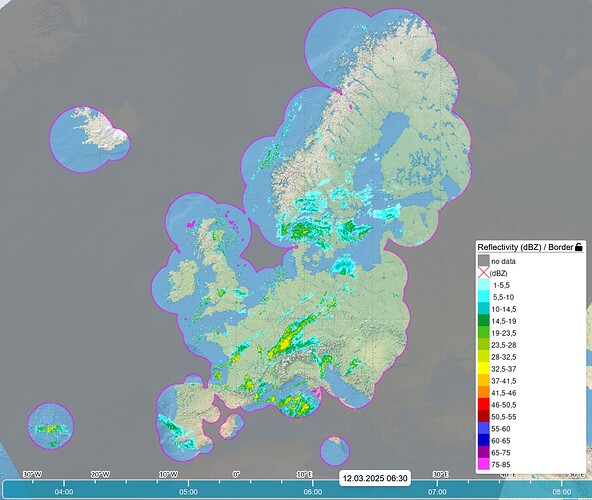

We always start off with a writable raster (usually a buffered image) that holds the data itself. The rest of the chain is what GeoServer does with the data: 1) crop by 2 pixels in the y-direction, 2) warp, 3) scale, 4) classify, 5) mosaic. It's always like that.
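For poking at the shape of this chain outside GeoServer, here is a minimal sketch of its first stages written against plain `java.awt.image` instead of JAI/JAI-EXT. That substitution is an assumption made so it runs without extra jars, which also means it does not exercise the real ops. It builds a single-band float raster, crops the top row(s) through a child raster, and rescales with nearest-neighbour sampling. Warp, classify and mosaic are left out, and `ChainSketch`, `floatRaster`, `cropTop` and `scaleNearest` are hypothetical names.

```java
import java.awt.image.BandedSampleModel;
import java.awt.image.DataBuffer;
import java.awt.image.Raster;
import java.awt.image.WritableRaster;

// Java2D stand-ins (not JAI/JAI-EXT) for the first stages of the chain.
// Warp, classify and mosaic are intentionally omitted.
final class ChainSketch {

    // Single-band float raster at (0, 0), standing in for the
    // WritableRenderedImageAdapter at the bottom of the op stack.
    static WritableRaster floatRaster(int width, int height) {
        return Raster.createWritableRaster(
                new BandedSampleModel(DataBuffer.TYPE_FLOAT, width, height, 1), null);
    }

    // Crop: a child view (sharing the parent's data) that skips the first `rows` rows.
    // Unlike the JAI Crop op, the child is re-based to (0, 0) to keep the sketch simple.
    static WritableRaster cropTop(WritableRaster src, int rows) {
        return src.createWritableChild(src.getMinX(), src.getMinY() + rows,
                src.getWidth(), src.getHeight() - rows, 0, 0, null);
    }

    // Nearest-neighbour rescale to a fixed output size: each destination pixel
    // copies the source pixel whose cell contains the destination pixel's centre.
    static WritableRaster scaleNearest(Raster src, int outW, int outH) {
        WritableRaster dst = floatRaster(outW, outH);
        for (int y = 0; y < outH; y++) {
            int sy = src.getMinY() + (int) ((y + 0.5) * src.getHeight() / outH);
            for (int x = 0; x < outW; x++) {
                int sx = src.getMinX() + (int) ((x + 0.5) * src.getWidth() / outW);
                dst.setSample(x, y, 0, src.getSampleFloat(sx, sy, 0));
            }
        }
        return dst;
    }
}
```

For example, `scaleNearest(cropTop(floatRaster(650, 530), 1), 31, 42)` turns a 650 x 529 crop into a 31 x 42 raster. The real Scale op is driven by scale factors and translations rather than a target size, so this only mirrors the data flow, not the exact sampling.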

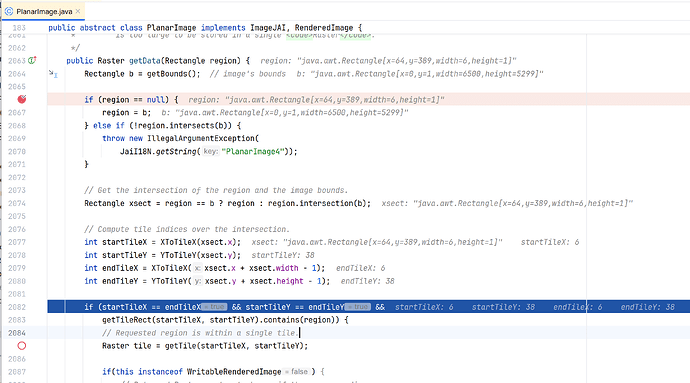

Here’s the dump of the op-stack:

JAI op: Mosaic(it.geosolutions.jaiext.mosaic.MosaicOpImage) at Level: 0, offset:0, 0, size:100 x 100, tile size:100 x 100

Params. Parameter 1:MOSAIC_TYPE_OVERLAY; Parameter 2:null; Parameter 3:[javax.media.jai.ROI@3f04f371]; Parameter 4:[[0.0]]; Parameter 5:[1.0]; Parameter 6:[RangeDouble[-Infinity, 0.0)];

Bands: 1, type: Byte; Color model:class java.awt.image.IndexColorModel, transparency: Translucent

Tile cache: it.geosolutions.concurrent.ConcurrentTileCacheMultiMap@222e782

Tile scheduler: com.sun.media.jai.util.SunTileScheduler@543ce332<global>, parallelism 20, priority 5

Number of sources: 1

JAI op: RasterClassifier(it.geosolutions.jaiext.classifier.RasterClassifierOpImage) at Level: 1, offset:34, 29, size:31 x 42, tile size:31 x 42

Params. Parameter 1:[Domain description:

name= no data

input range=RangeDouble(-Infinity, -990.0)

output range=RangeDouble[0.0, 0.0]

colors=java.awt.Color[r=133,g=133,b=133], Domain description:

name=(dBZ)

input range=RangeDouble[-990.0, 1.0)

output range=RangeDouble[1.0, 1.0]

colors=java.awt.Color[r=0,g=0,b=0], Domain description:

name= 1-5,5

input range=RangeDouble[1.0, 5.5)

output range=RangeDouble[2.0, 2.0]

colors=java.awt.Color[r=153,g=255,b=255], Domain description:

name= 5,5-10

input range=RangeDouble[5.5, 10.0)

output range=RangeDouble[3.0, 3.0]

colors=java.awt.Color[r=51,g=255,b=255], Domain description:

name= 10-14,5

input range=RangeDouble[10.0, 14.5)

output range=RangeDouble[4.0, 4.0]

colors=java.awt.Color[r=0,g=202,b=202], Domain description:

name= 14,5-19

input range=RangeDouble[14.5, 19.0)

output range=RangeDouble[5.0, 5.0]

colors=java.awt.Color[r=0,g=153,b=52], Domain description:

name= 19-23,5

input range=RangeDouble[19.0, 23.5)

output range=RangeDouble[6.0, 6.0]

colors=java.awt.Color[r=77,g=191,b=26], Domain description:

name= 23,5-28

input range=RangeDouble[23.5, 28.0)

output range=RangeDouble[7.0, 7.0]

colors=java.awt.Color[r=153,g=204,b=0], Domain description:

name= 28-32,5

input range=RangeDouble[28.0, 32.5)

output range=RangeDouble[8.0, 8.0]

colors=java.awt.Color[r=204,g=230,b=0], Domain description:

name= 32,5-37

input range=RangeDouble[32.5, 37.0)

output range=RangeDouble[9.0, 9.0]

colors=java.awt.Color[r=255,g=255,b=0], Domain description:

name= 37-41,5

input range=RangeDouble[37.0, 41.5)

output range=RangeDouble[10.0, 10.0]

colors=java.awt.Color[r=255,g=196,b=0], Domain description:

name= 41,5-46

input range=RangeDouble[41.5, 46.0)

output range=RangeDouble[11.0, 11.0]

colors=java.awt.Color[r=255,g=137,b=0], Domain description:

name= 46-50,5

input range=RangeDouble[46.0, 50.5)

output range=RangeDouble[12.0, 12.0]

colors=java.awt.Color[r=255,g=0,b=0], Domain description:

name= 50,5-55

input range=RangeDouble[50.5, 55.0)

output range=RangeDouble[13.0, 13.0]

colors=java.awt.Color[r=180,g=0,b=0], Domain description:

name= 55-60

input range=RangeDouble[55.0, 60.0)

output range=RangeDouble[14.0, 14.0]

colors=java.awt.Color[r=72,g=72,b=255], Domain description:

name= 60-65

input range=RangeDouble[60.0, 65.0)

output range=RangeDouble[15.0, 15.0]

colors=java.awt.Color[r=0,g=0,b=202], Domain description:

name= 65-75

input range=RangeDouble[65.0, 75.0)

output range=RangeDouble[16.0, 16.0]

colors=java.awt.Color[r=153,g=0,b=153], Domain description:

name= 75-85

input range=RangeDouble[75.0, 85.0)

output range=RangeDouble[17.0, 17.0]

colors=java.awt.Color[r=255,g=51,b=255], Domain description:

name=No data1

input range=RangeDouble[NaN, NaN]

output range=RangeDouble[18.0, 18.0]

colors=java.awt.Color[r=0,g=0,b=0]]; Parameter 2:-1; Parameter 3:javax.media.jai.ROI@3989839b; Parameter 4:null;

Bands: 1, type: Byte; Color model:class java.awt.image.IndexColorModel, transparency: Translucent

Tile cache: it.geosolutions.concurrent.ConcurrentTileCacheMultiMap@222e782

Tile scheduler: com.sun.media.jai.util.SunTileScheduler@543ce332<global>, parallelism 20, priority 5

Number of sources: 1

JAI op: Scale(it.geosolutions.jaiext.scale.ScaleNearestOpImage) at Level: 2, offset:34, 29, size:31 x 42, tile size:100 x 100

Params. Parameter 1:0.004739226; Parameter 2:0.007972344; Parameter 3:34.369297; Parameter 4:29.058283; Parameter 5:InterpolationNearest; Parameter 6:javax.media.jai.ROI@7f1aebc2; Parameter 7:false; Parameter 8:null; Parameter 9:null;

Bands: 1, type: Float; Color model:class java.awt.image.ComponentColorModel, transparency: Opaque

Tile cache: it.geosolutions.concurrent.ConcurrentTileCacheMultiMap@222e782

Tile scheduler: com.sun.media.jai.util.SunTileScheduler@543ce332<global>, parallelism 20, priority 5

Number of sources: 1

JAI op: Warp(it.geosolutions.jaiext.warp.WarpNearestOpImage) at Level: 3, offset:0, 1, size:6500 x 5299, tile size:100 x 100

Params. Parameter 1:javax.media.jai.WarpGrid@41fb4e3d; Parameter 2:InterpolationNearest; Parameter 3:[NaN]; Parameter 4:javax.media.jai.ROI@d01a0bb; Parameter 5:null;

Bands: 1, type: Float; Color model:class java.awt.image.ComponentColorModel, transparency: Opaque

Tile cache: it.geosolutions.concurrent.ConcurrentTileCacheMultiMap@222e782

Tile scheduler: com.sun.media.jai.util.SunTileScheduler@543ce332<global>, parallelism 20, priority 5

Number of sources: 1

JAI op: Crop(it.geosolutions.jaiext.crop.CropOpImage) at Level: 4, offset:0, 1, size:6500 x 5299, tile size:6500 x 5300

Params. Parameter 1:0.0; Parameter 2:1.0; Parameter 3:6500.0; Parameter 4:5299.0; Parameter 5:null; Parameter 6:null; Parameter 7:[0.0];

Bands: 1, type: Float; Color model:class java.awt.image.ComponentColorModel, transparency: Opaque

Tile cache: it.geosolutions.concurrent.ConcurrentTileCacheMultiMap@222e782

Tile scheduler: com.sun.media.jai.util.SunTileScheduler@543ce332<global>, parallelism 20, priority 5

Number of sources: 1

Non op: class javax.media.jai.WritableRenderedImageAdapter at Level: 5, offset:0, 0, size:6500 x 5300, tile size:6500 x 5300

Bands: 1, type: Float; Color model:class java.awt.image.ComponentColorModel, transparency: Opaque

Tile cache: null

Tile scheduler: null
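The RasterClassifier level is the easiest one to check by hand. Below is a table-driven sketch of the mapping printed above, with the range boundaries and output values copied from the dump. The lookup code itself (`DbzClassifierSketch`, `classify`) is hypothetical and is not the JAI-EXT implementation. Note that no domain in the dump covers values of 85 dBZ or more; the sketch reports those as an empty result rather than guessing what the real op does with them.

```java
import java.util.OptionalInt;

// Table-driven stand-in for the RasterClassifier domains in the dump.
// Boundaries and output classes are copied from the dump; the lookup
// logic is a hypothetical sketch, not the JAI-EXT implementation.
final class DbzClassifierSketch {

    // Consecutive entries bound the half-open domains [LOWER[i], LOWER[i + 1]),
    // which map to class i + 1 ("(dBZ)" = 1, " 1-5,5" = 2, ..., " 75-85" = 17).
    private static final double[] LOWER = {
        -990.0, 1.0, 5.5, 10.0, 14.5, 19.0, 23.5, 28.0, 32.5,
        37.0, 41.5, 46.0, 50.5, 55.0, 60.0, 65.0, 75.0, 85.0
    };

    static final int NO_DATA_CLASS = 0; // " no data", input (-Infinity, -990.0)
    static final int NAN_CLASS = 18;    // "No data1", input [NaN, NaN]

    static OptionalInt classify(double v) {
        if (Double.isNaN(v)) {
            return OptionalInt.of(NAN_CLASS);
        }
        if (v == Double.NEGATIVE_INFINITY) {
            // The dump prints this end of the range as open, so -Infinity is left unmapped here.
            return OptionalInt.empty();
        }
        if (v < LOWER[0]) {
            return OptionalInt.of(NO_DATA_CLASS);
        }
        for (int i = 0; i + 1 < LOWER.length; i++) {
            if (v >= LOWER[i] && v < LOWER[i + 1]) {
                return OptionalInt.of(i + 1);
            }
        }
        // v >= 85.0 (including +Infinity): no domain in the dump covers it.
        return OptionalInt.empty();
    }
}
```

For instance, `classify(3.0)` gives class 2 (the ` 1-5,5` domain) and `classify(Double.NaN)` gives 18, while `classify(90.0)` comes back empty.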

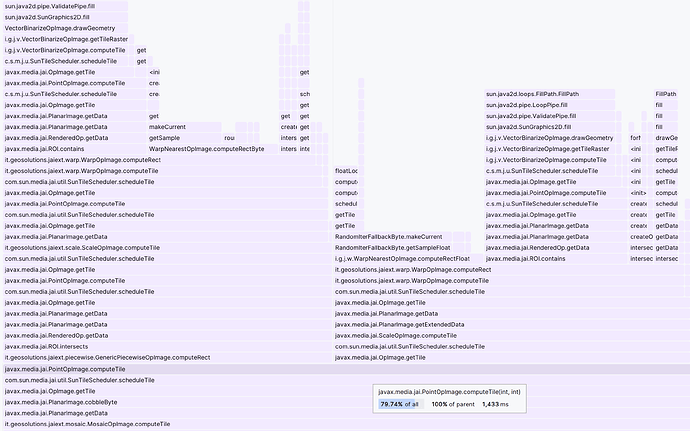

I'm not expecting anyone to analyze the stack and point me to our problem, but it gives me a good tool to figure out what might be going wrong.

Btw, switching from JAI-EXT to JAI makes no difference at all to the chain above (except, of course, for the class of the Scale op).

@aaime-geosolutions, thanks for taking a look at the JAI-EXT Scale operation. I agree with you that making that single change in JAI-EXT will probably not be enough. There must be more to it, if the problem is indeed there.

My idea is still to write a reproducible JUnit test that shows the problem. It's just not that easy, because of all the parameters, properties and rendering hints that have to be passed to every operation in the stack. I will keep trying.
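One self-contained piece such a reproducer could start from is checking that the Scale parameters in the dump reproduce the bounds it reports. The sketch below reads Parameters 1 to 4 of the Scale op as scaleX, scaleY, transX, transY and assumes a pixel-centre rounding rule (a destination pixel is kept when its centre falls inside the forward-mapped source bounds). `ScaleBoundsSketch` and `destBounds` are hypothetical names, and only positive scale factors are handled.

```java
import java.awt.Rectangle;

// Recomputes a Scale op's destination bounds from its parameters, assuming
// dst = src * scale + translate and a pixel-centre rounding rule.
// Hypothetical helper; positive scale factors are assumed (negative ones would swap min/max).
final class ScaleBoundsSketch {

    static Rectangle destBounds(Rectangle src, double scaleX, double scaleY,
                                double transX, double transY) {
        // Forward-map the source rectangle's edges.
        double x0 = src.x * scaleX + transX;
        double y0 = src.y * scaleY + transY;
        double x1 = (src.x + src.width) * scaleX + transX;
        double y1 = (src.y + src.height) * scaleY + transY;
        // Keep destination pixels whose centres (i + 0.5) lie in [x0, x1) and [y0, y1).
        int minX = (int) Math.ceil(x0 - 0.5);
        int minY = (int) Math.ceil(y0 - 0.5);
        int maxX = (int) Math.ceil(x1 - 0.5); // exclusive
        int maxY = (int) Math.ceil(y1 - 0.5); // exclusive
        return new Rectangle(minX, minY, maxX - minX, maxY - minY);
    }
}
```

With the Warp output as source, `destBounds(new Rectangle(0, 1, 6500, 5299), 0.004739226, 0.007972344, 34.369297, 29.058283)` gives x=34, y=29 and a size of 31 x 42, which matches the offset and size printed at the Scale level of the dump.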

One last question: is there a test somewhere (presumably in the WMS module) that lets me issue a GetMap request directly and get back the rendered image itself? I tried to find one, but haven't found a good example yet.

Thanks!!